Machine Learning#

Silicon Labs natively supports TensorFlow Lite for Microcontrollers (TFLM) in the GSDK. TensorFlow is one of the most widely used neural network development platforms. Silicon Labs also supports other Machine Learning (ML) methods through the use of third-party software tools and solutions.

This page will help you decide:

What software and tools are most applicable to you and your application.

What examples, demos, and/or tutorials are available to help you get started.

For a general overview of Silicon Labs' Machine Learning approach and product offerings, see Silicon Labs Machine Learning Page.

For more information on the GSDK support of TFLM, see TensorFlow Lite for Microcontrollers.

Third-party software tools and solutions may provide their own inference engines, unless they leverage the GSDK integrated TFLM.

For a more in-depth explanation of Machine Learning, including a discussion of models, deploying models, and some key challenges, see UG103.19 Machine Learning Fundamentals.

Machine Learning and the Development Workflow#

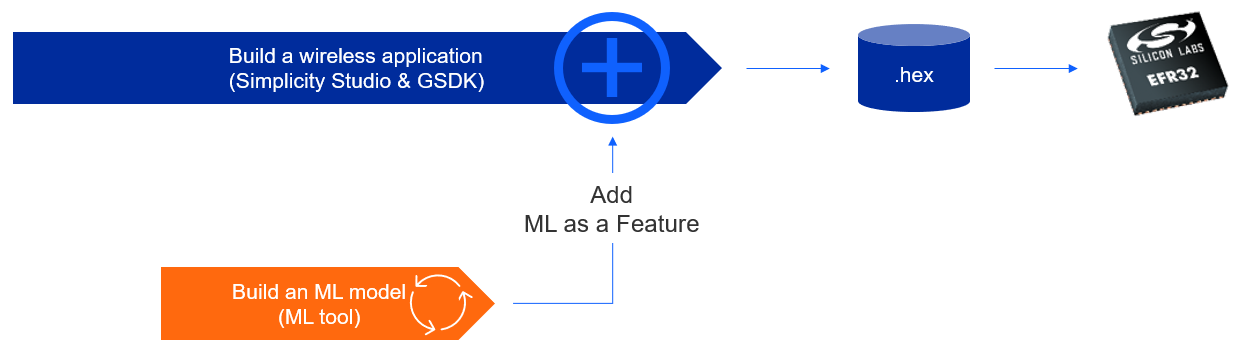

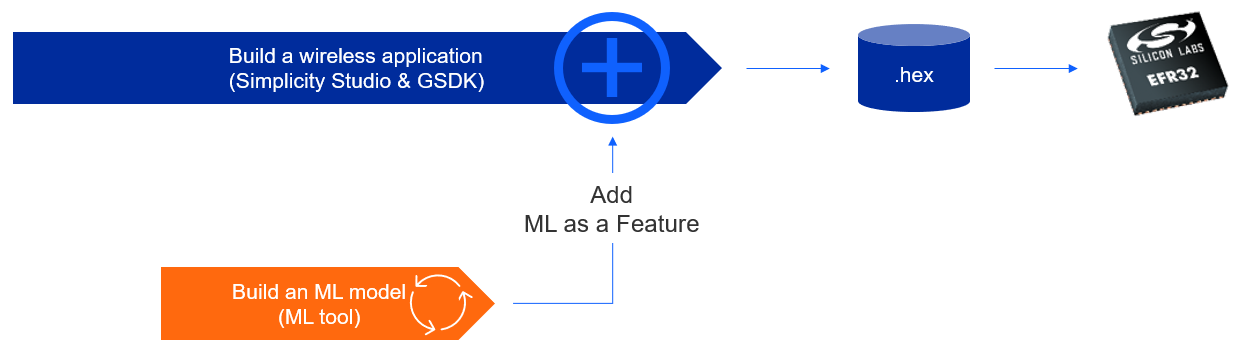

Developing an application that incorporates machine learning as a feature requires two distinct workflows:

The embedded application development workflow used to create a wireless application (with Simplicity Studio or your favorite IDE).

The machine learning workflow used to create the machine learning feature that will be added to the embedded application.

Familiarity with the standard embedded application development workflow is assumed. The tools presented on this page cover the various options for developing the machine learning feature.

How to Build a Machine Learning Application for Silicon Labs Parts#

Depending on the tool selected, different sets of developer skills are required to use it.

For the ML Expert, Silicon Labs provides native TensorFlow Lite for Microcontrollers (TFLM) support. Silicon Labs also provides a Python reference package that combines and simplifies all the necessary TensorFlow training steps. Knowledge of two aspects of machine learning is required for these tools:

Training a model. There are two options to consider: use TensorFlow directly, or use the Silicon Labs Machine Learning Toolkit (MLTK).

Adding the model to an application and running inference. There are examples provided to help get started.

Training with TensorFlow#

The GSDK supports TensorFlow Lite for Microcontrollers natively. The developer can create a quantized .tflite model file using the TensorFlow environment and integrate it into the GSDK. See how TensorFlow Lite for Microcontrollers is supported in the GSDK for more information.
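As background on what "quantized" means here: TensorFlow Lite's 8-bit scheme represents a real value as `scale * (q - zero_point)`, where `q` is an int8. The sketch below works through that arithmetic in plain Python. The function names are illustrative only and are not part of TensorFlow or the GSDK; in practice the TensorFlow Lite converter chooses the scale and zero point for each tensor.

```python
def quantize_params(rmin, rmax, qmin=-128, qmax=127):
    """Return (scale, zero_point) mapping the real range [rmin, rmax] onto int8."""
    # The real range is widened to include 0.0 so that zero is exactly representable.
    rmin, rmax = min(rmin, 0.0), max(rmax, 0.0)
    if rmax == rmin:
        return 1.0, 0
    scale = (rmax - rmin) / (qmax - qmin)
    zero_point = int(round(qmin - rmin / scale))
    # Keep the zero point inside the integer range.
    return scale, max(qmin, min(qmax, zero_point))


def quantize(x, scale, zero_point, qmin=-128, qmax=127):
    """Map a real value to the nearest int8 code, saturating at the range limits."""
    q = int(round(x / scale)) + zero_point
    return max(qmin, min(qmax, q))


def dequantize(q, scale, zero_point):
    """Recover the approximate real value from an int8 code."""
    return scale * (q - zero_point)


# Example: activations known to lie in [0.0, 2.55]
scale, zero_point = quantize_params(0.0, 2.55)
q = quantize(1.0, scale, zero_point)
approx = dequantize(q, scale, zero_point)
```

The round-trip error for any in-range value is at most half of `scale`, which is why a representative data set matters when the converter estimates each tensor's range.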

If you use TensorFlow directly to create a model, note the following:

Make sure the feature extraction used while training on your PC is EXACTLY the same as the feature extraction used in the embedded device. See how the MLTK addresses this problem for the audio use case for more discussion.

Use the MLTK Profiler Utility to determine the RAM and Flash requirements of your TensorFlow Lite for Microcontrollers model (.tflite).
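One generic way to guard against feature-extraction drift between the PC and the device is to keep the front-end settings in a single structure and compare a fingerprint of it on both sides. The sketch below is an assumption-laden illustration, not the MLTK's mechanism: the `FrontendSettings` fields and the helper names are hypothetical, and a real audio front end typically has more parameters.

```python
import hashlib
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class FrontendSettings:
    # Hypothetical parameter set; a real front end may have many more fields.
    sample_rate_hz: int
    window_size_ms: int
    window_step_ms: int
    num_channels: int


def fingerprint(settings):
    """Hash a canonical JSON form so both sides can compare one short string."""
    canonical = json.dumps(asdict(settings), sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def mismatched_fields(training, device):
    """Return {field: (training_value, device_value)} for every differing field."""
    dev = asdict(device)
    return {k: (v, dev[k]) for k, v in asdict(training).items() if dev[k] != v}


training = FrontendSettings(16000, 30, 10, 40)
device = FrontendSettings(16000, 30, 20, 40)  # step size drifted on the device side

if fingerprint(training) != fingerprint(device):
    print("Feature extraction mismatch:", mismatched_fields(training, device))
```

A check like this only catches differing parameters; it does not prove that two implementations of the same front end produce bit-identical features.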

Getting Started

Developing a Model Using TensorFlow and Keras (docs.silabs.com)

Training with the Machine Learning Toolkit#

The Machine Learning Toolkit (MLTK) is a set of Python scripts that implement a layer over TensorFlow to help the TensorFlow developer build models that can be successfully deployed on Silicon Labs chips. These scripts are a reference implementation for the audio use case, which includes the use of a modified version of the TensorFlow audio front end. Support for any other use case is the responsibility of the developer.

Here are reasons why a TensorFlow developer may want to use the MLTK.

Note: This package is made available as an open source, self-serve reference supported only by the on-line documentation and community support. There are no Silicon Labs support services for this software at this time.

Requires:

An existing data set that is cleaned, classified, and optionally labeled.

Deep understanding of TensorFlow model development (how to pre-process the raw data and accentuate the key elements, how to create the proper network of convolutional computations, and how to interpret the constant output of stochastic information from inferencing).

Skill in using Python scripts.

The MLTK can be used in two ways:

Use the MLTK to train, validate, and prepare the model to be integrated into a GSDK-based application.

Use the MLTK Profiler to determine whether your .tflite model will fit on a specific chip. See Using the MLTK Profiler Utility.

Getting Started

To get started, use the Machine Learning Toolkit (MLTK) to create a model and use it in one of the GSDK provided examples.

Create a Keyword Spotting Model for a GSDK Example (GitHub.io) - MLTK Tutorial

Adding the Model to an Application and Running Inference#

The trained model, whether using TensorFlow or the MLTK, is a .tflite file. To use the .tflite file, add machine learning to your application.
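On a microcontroller, a .tflite file is commonly compiled into the firmware image as a constant byte array. The sketch below shows that general idea in plain Python. It is not the GSDK's own conversion tooling, which may perform this step for you, and the function name, array name, and file path are hypothetical.

```python
def tflite_to_c_array(model_bytes, name="model_data", per_line=12):
    """Render raw model bytes as C source defining a const uint8_t array."""
    lines = []
    for i in range(0, len(model_bytes), per_line):
        chunk = model_bytes[i:i + per_line]
        lines.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(lines)
    # Note: real projects often add an alignment attribute to the array;
    # it is omitted here to keep the sketch minimal.
    return (
        "#include <stdint.h>\n\n"
        f"const uint8_t {name}[] = {{\n{body}\n}};\n"
        f"const uint32_t {name}_len = {len(model_bytes)};\n"
    )


# Usage with a hypothetical file path:
#   with open("my_model.tflite", "rb") as f:
#       print(tflite_to_c_array(f.read()))
example_source = tflite_to_c_array(b"\x00\x01\xff", name="m")
```

Storing the model as a `const` array lets the linker place it in flash, so only the tensor working memory needs RAM.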

Getting Started

Follow the link below for GSDK examples that include machine learning and show how to run inference using pre-trained models. The pre-trained models can be replaced with a model trained from one of the methods above.

Sample Applications Provided with GSDK, including:

Voice Control Light - keyword detection to control an on-board LED

Z3SwitchWithVoice - voice control of a Zigbee 3.0 switch

TFLM Hello World - the TFLM example ported to GSDK

TFLM Micro Speech - the TFLM example ported to GSDK

TFLM Magic Wand - the TFLM example ported to GSDK

Third Party Partner Tools#

For an ML Explorer, these tools cover the end-to-end workflow for creating a machine learning neural network model and accompanying embedded software to include in your application. They offer:

An easy-to-use graphical user interface.

An easy way to capture and label data.

A tool that will run through a sequence of finding the best model based on the requirements/limitations, commonly referred to as AutoML.

A tool that simplifies the verification and conversion steps.

Requires:

Understanding of machine learning concepts (data collection, training, verification, inference, confusion matrix, and so on).

Ability to collect data that represents the real world scenarios for your product deployment.
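As a quick illustration of one of the concepts listed above, a confusion matrix counts how often each actual class was predicted as each class. The sketch below uses made-up labels and predictions purely for illustration; the partner tools produce this report for you.

```python
from collections import Counter


def confusion_matrix(actual, predicted, labels):
    """Rows are actual classes, columns are predicted classes."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]


def accuracy(matrix):
    """Fraction of samples on the diagonal (predicted class equals actual class)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


labels = ["on", "off", "noise"]
actual = ["on", "on", "off", "off", "noise", "noise"]
predicted = ["on", "off", "off", "off", "noise", "on"]

matrix = confusion_matrix(actual, predicted, labels)
for label, row in zip(labels, matrix):
    print(f"{label:>6}: {row}")
print("accuracy:", accuracy(matrix))
```

Off-diagonal entries show which classes are confused with each other, which often points to where more or better training data is needed.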

The following tools fit well with developing ML as a feature for a Silicon Labs-based embedded application.

Edge Impulse#

Edge Impulse is ushering in the future of embedded machine learning by empowering developers to create and optimize solutions with real-world data. They are making the process of building, deploying, and scaling embedded ML applications easier and faster than ever, unlocking massive value across every industry, with millions of developers making billions of devices smarter.

Getting Started

Using Edge Impulse Studio with the xG24 Dev Kit

SensiML#

SensiML offers cutting edge AutoML embedded code generation software for implementing AI at the IoT edge. With SensiML Analytics Toolkit, developers have an AI development tool which supports rapid data collection, labeling, feature extraction, ML classification and optimized firmware code generation. Automation built into the tool drastically reduces development time and cost, allowing projects ranging from single users to large teams to generate optimized edge AI sensor algorithms in a fraction of the time that would have otherwise been required with hand coding.

Getting Started

Using SensiML Analytics Toolkit with the xG24 Dev Kit

MicroAI#

MicroAI is an endpoint-based artificial intelligence and machine learning engine that lives directly on a device. Aggregating and ingesting data directly from connected devices, MicroAI analyzes data locally on the microcontroller (MCU). MicroAI algorithms are then used to create behavioral profiles of the connected assets to establish baselines for optimal performance. With continued, constant monitoring of assets, alerts are reported in real time, highlighting abnormal behavior. The asset-centric approach of MicroAI thus enables OEMs, asset operators, and operational stakeholders to monitor and understand their devices most effectively as they relate to performance and security goals.

Third Party Partner Solutions#

These tools do not require any knowledge of machine learning to take full advantage of their features. They are designed for specific use cases.

Wake Word/Keyword Spotting#

Coming Soon

Choosing a Machine Learning Tool Based on Use Case#

This section provides getting started links for available or suggested tools, based on use case. Each link may be an example, a demo, or a tutorial.

Sensor Signal Processing#

Sensor signal processing is the use of low data rate sensors, like accelerometers, gyroscopes, air quality sensors, temperature sensors, pressure sensors, and so on. These use cases can be found in many types of markets, such as preventive maintenance, medical devices or smoke detectors.

Magic Wand (github) - TensorFlow Example

Predictive Maintenance Demo (video 5 min) - SensiML Demo

Build the Predictive Maintenance Demo (tutorial video 80 min) - SensiML Tutorial

Audio Pattern Matching#

Audio pattern matching uses a microphone to detect different sounds. The sounds detected can cover a wide range of non-speech sounds, such as a squeaky bearing, jingling keys, breaking glass, running water, animal sounds, and so on.

See Audio Classifier - GSDK Platform Example

Create a Keyword Spotting Model for a GSDK Example (GitHub.io) - MLTK Tutorial

This tutorial is for keyword spotting, but it can be used for audio classification because the steps are the same.

Creating an Acoustic Smart Home Sensor (blog w/2 min video) - SensiML Demo

Introduction to Building an Audio Classifier (video 10 min) - Edge Impulse Tutorial Introduction

Building an Audio Classifier (video 70 min) - Edge Impulse Tutorial

Voice Command#

Voice command is a specific subset of audio pattern matching: the recognition of a small set of spoken words. This is also referred to as keyword spotting. It can be used in a variety of use cases, such as waking up a voice service (e.g., Alexa) or using voice activation for smart home devices or appliances.

See Z3SwitchWithVoice - GSDK Zigbee Example

This example shows how to use ML with a wireless stack (Zigbee).

See Voice Control Light - GSDK Platform Example

Create a Keyword Spotting Model for a GSDK Example (GitHub.io) - MLTK Tutorial

This tutorial can be used for either example above to create a model (i.e., a .tflite file).

Building a Speech Recognition Demo (video 90 min) - Edge Impulse Tutorial

Low-Resolution Vision#

Low-resolution vision uses a camera with a resolution of around 100x100 to detect objects for presence detection, people counting, video wake-up, and more.

People Counting Using Object Detection (Tutorial video 30 min) - Edge Impulse
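To give a feel for why a resolution of around 100x100 suits a microcontroller, the sketch below computes the size of a single input frame. It assumes one byte per pixel value (as in an 8-bit quantized model input); the function name is illustrative, and actual memory use also depends on the model's intermediate tensors.

```python
def input_buffer_bytes(width, height, channels=1, bytes_per_value=1):
    """Size in bytes of one uncompressed input frame."""
    return width * height * channels * bytes_per_value


grayscale_100 = input_buffer_bytes(100, 100)             # single-channel frame
rgb_100 = input_buffer_bytes(100, 100, channels=3)       # three-channel frame
rgb_vga = input_buffer_bytes(640, 480, channels=3)       # VGA frame for comparison

print(grayscale_100, rgb_100, rgb_vga)
```

A 100x100 grayscale frame needs 10,000 bytes, while a 640x480 RGB frame needs 921,600 bytes for the input alone.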