Machine Learning#

Silicon Labs natively supports TensorFlow Lite for Microcontrollers (TFLM) in the GSDK. TensorFlow is one of the most widely used neural network development platforms. Silicon Labs also supports other Machine Learning (ML) methods through the use of third-party software tools and solutions.

This page will help you decide:

What software and tools are most applicable to you and your application.

What examples, demos, and/or tutorials are available to help you get started.

You may also want to visit:

silabs.com/ai-ml for a general overview of Silicon Labs' Machine Learning approach and product offerings.

docs.silabs.com/TFLM overview for more information on the native GSDK support of TensorFlow Lite for Microcontrollers.

siliconlabs.github.io/mltk for the Silicon Labs Machine Learning Toolkit, one of several tool options mentioned below: an open-source, self-serve, community-supported Python reference package for TensorFlow developers.

UG103.19-Machine Learning Fundamentals for a more in-depth explanation of Machine Learning, including discussion of models, deploying models, and some key challenges.

Machine Learning and the Development Workflow#

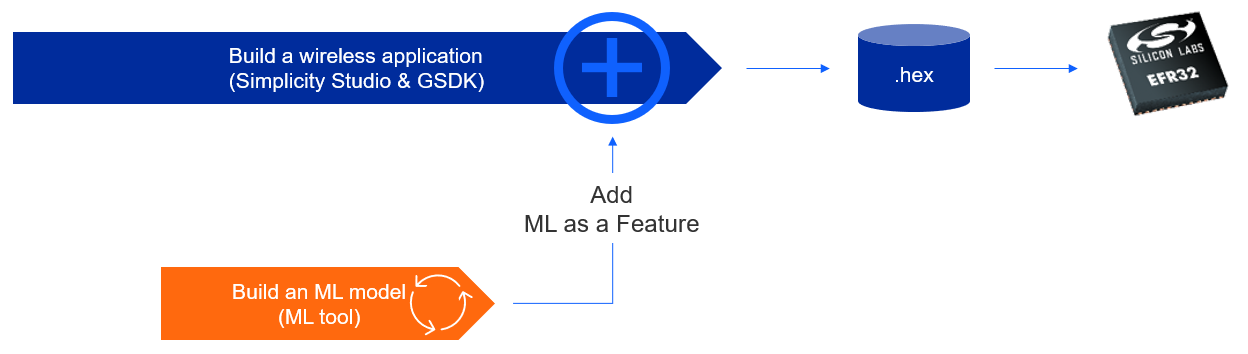

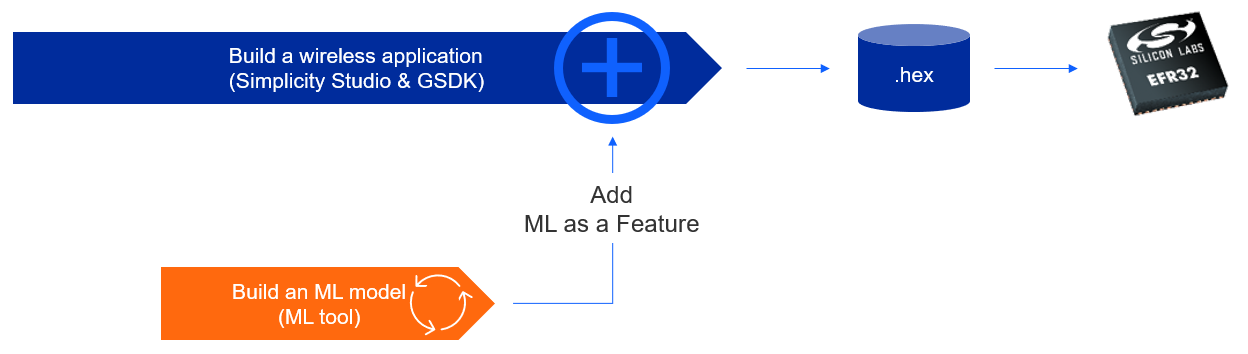

Developing an application that incorporates machine learning as a feature requires two distinct workflows:

The embedded application development workflow used to create a wireless application (with Simplicity Studio or your favorite IDE).

The machine learning workflow used to create the machine learning feature that will be added to the embedded application.

Familiarity with the standard embedded application development workflow is assumed. The tools presented on this page cover the various options for developing the machine learning feature.

Know Your Machine Learning Developer Skills#

We have defined three segments of developer skills expected when using an ML toolchain:

ML Expert: The developer is experienced with TensorFlow and Python. The Expert tools require that the developer is very proficient with these skills, or is willing to learn.

ML Explorer: The developer knows the basic machine learning concepts and workflow (data capture, training, testing, and converting), but wants a curated approach that walks the developer through every step needed to implement machine learning as a feature in their product.

ML Solutions: The developer wants to use machine learning technology but has no knowledge of machine learning and is not willing to learn it. Instead, they want a black box solution that they can add as a value-added feature to their product.

Native TFLM Support for Experts#

For the ML Expert, Silicon Labs provides native TensorFlow Lite for Microcontrollers (TFLM) support. There are two implementation aspects to consider when using these tools:

Training a model. For training there are two options to consider: use TensorFlow directly, or use the Silicon Labs Machine Learning Toolkit (MLTK).

Integrating the model. This means adding the .tflite model file to the embedded application and running inference. The getting started sections below refer to examples that can help the developer with integration.

Training a Model with TensorFlow#

The GSDK supports TensorFlow Lite for Microcontrollers (TFLM) natively. The developer can create a quantized .tflite model file using the TensorFlow environment directly and integrate it into the GSDK.
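To make "quantized" concrete, here is a minimal sketch of the affine int8 mapping that TensorFlow Lite quantization is based on, where a real value is approximated as `scale * (q - zero_point)`. The function names and the example range are illustrative assumptions, not part of the GSDK or TensorFlow APIs. In practice the TensorFlow converter chooses the parameters for you from calibration data.

```python
INT8_MIN, INT8_MAX = -128, 127


def choose_params(x_min: float, x_max: float) -> tuple:
    """Pick a scale and zero point so that [x_min, x_max] maps onto int8."""
    # The representable range must contain 0.0 so that zero is exact.
    x_min, x_max = min(x_min, 0.0), max(x_max, 0.0)
    if x_max == x_min:
        return 1.0, 0  # degenerate all-zero range
    scale = (x_max - x_min) / (INT8_MAX - INT8_MIN)
    zero_point = round(INT8_MIN - x_min / scale)
    return scale, zero_point


def quantize(x: float, scale: float, zero_point: int) -> int:
    """Map a real value to int8, clamping values outside the range."""
    q = round(x / scale) + zero_point
    return max(INT8_MIN, min(INT8_MAX, q))


def dequantize(q: int, scale: float, zero_point: int) -> float:
    """Recover the approximate real value from its int8 code."""
    return scale * (q - zero_point)


# Hypothetical activation range of 0.0 to 2.55.
scale, zero_point = choose_params(0.0, 2.55)
q = quantize(1.0, scale, zero_point)
restored = dequantize(q, scale, zero_point)
print(q, restored)
```

The rounding step is where quantization error enters: each stored value costs one byte instead of four, at the price of a small loss of precision.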

Note:

Use the MLTK Profiler Utility to determine the RAM and Flash requirements of your .tflite model file to confirm the fit within your embedded application.

Getting Started

Developing a Model Using TensorFlow and Keras (docs.silabs.com)

Training a Model with the Silicon Labs MLTK#

The Machine Learning Toolkit (MLTK) was created to help the TensorFlow developer. It is a set of Python scripts designed to follow the typical machine learning workflow for Silicon Labs embedded devices.

Note:

This package is made available as an open-source, self-serve, community-supported reference package with a comprehensive set of online documentation. There are no Silicon Labs support services for this software at this time.

These scripts are a reference implementation for the use cases covered by the documented tutorials. Support for other use cases is the responsibility of the Expert developer.

Getting Started

Create a Keyword Spotting Model for a GSDK example. (GitHub.io) - MLTK Tutorial

Integrating the Model#

The trained model, whether created with TensorFlow directly or with the MLTK, is represented in a .tflite file. To add the .tflite file to your embedded application, see Developing an Inference Application.
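As background on what integration involves, TensorFlow Lite for Microcontrollers projects commonly compile the model into the firmware as a byte array. The sketch below shows that generic conversion under stated assumptions: the function name, variable names, and placeholder bytes are hypothetical, and the emitted `alignas` line assumes a C++ source file. The GSDK tooling may perform an equivalent step for you, so treat this as an illustration rather than the required procedure.

```python
def tflite_to_c_array(model_bytes: bytes, var_name: str = "g_model",
                      per_line: int = 12) -> str:
    """Render the raw bytes of a .tflite file as C++ array source text."""
    rows = []
    for start in range(0, len(model_bytes), per_line):
        chunk = model_bytes[start:start + per_line]
        rows.append("  " + ", ".join(f"0x{b:02x}" for b in chunk) + ",")
    body = "\n".join(rows)
    return (
        "// Generated from a .tflite file; names are illustrative.\n"
        f"alignas(16) const unsigned char {var_name}[] = {{\n{body}\n}};\n"
        f"const unsigned int {var_name}_len = {len(model_bytes)};\n"
    )


# Placeholder bytes standing in for the contents of a real model file.
demo_source = tflite_to_c_array(b"\x1c\x00\x00\x00TFL3", "g_demo_model")
print(demo_source)
```

For a real model, read the file in binary mode (for example, `open(path, "rb").read()`) and pass the resulting bytes to the function.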

There are several GSDK examples that include machine learning and show how to run inference using pre-trained models. The pre-trained models can be replaced with a model trained from one of the methods above.

Getting Started

Start with any one of these Sample Applications that are provided with the GSDK.

Third Party Partner Toolchains for Explorers#

For the ML Explorer, these tools curate the end-to-end workflow for creating an application-specific machine learning model and the accompanying embedded software to include in your application. They offer:

End-to-end coverage of the machine learning workflow

An easy-to-follow developer experience

Extra value-added features such as AutoML or object detection

They require:

Understanding of machine learning concepts (data collection, training, verification, inference, confusion matrix, etc.).

Ability to collect data that represents the real world scenarios for your product deployment.
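As a quick refresher on one of the concepts listed above, the following minimal sketch builds a confusion matrix from test results. The labels and predictions are made-up illustrative data, and the helper names are hypothetical. The partner tools typically generate this kind of report for you.

```python
from collections import Counter


def confusion_matrix(actual, predicted, labels):
    """Rows are the true labels, columns are the predicted labels."""
    counts = Counter(zip(actual, predicted))
    return [[counts[(a, p)] for p in labels] for a in labels]


def accuracy(matrix):
    """Fraction of samples on the diagonal (correctly classified)."""
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total if total else 0.0


# Made-up keyword-spotting test results.
labels = ["on", "off", "unknown"]
actual = ["on", "on", "off", "off", "unknown", "unknown"]
predicted = ["on", "off", "off", "off", "unknown", "on"]

matrix = confusion_matrix(actual, predicted, labels)
for label, row in zip(labels, matrix):
    print(f"{label:>8}: {row}")
print(f"accuracy: {accuracy(matrix):.2f}")
```

Off-diagonal cells show which classes the model confuses with each other, which is often more useful than the overall accuracy when deciding what extra data to collect.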

The following tools fit well with developing ML as a feature for a Silicon Labs-based embedded application.

Edge Impulse#

Edge Impulse is ushering in the future of embedded machine learning by empowering developers to create and optimize solutions with real-world data. They are making the process of building, deploying, and scaling embedded ML applications easier and faster than ever, unlocking massive value across every industry, with millions of developers making billions of devices smarter.

Getting Started

Using Edge Impulse Studio with the xG24 Dev Kit

SensiML#

SensiML offers cutting edge AutoML embedded code generation software for implementing AI at the IoT edge. With SensiML Analytics Toolkit, developers have an AI development tool which supports rapid data collection, labeling, feature extraction, ML classification and optimized firmware code generation. Automation built into the tool drastically reduces development time and cost, allowing projects ranging from single users to large teams to generate optimized edge AI sensor algorithms in a fraction of the time that would have otherwise been required with hand coding.

Getting Started

Using SensiML Analytics Toolkit with the xG24 Dev Kit

Third Party Partner Solutions#

These tools do not require any knowledge of machine learning to take full advantage of their features. They are designed for specific use cases.

Anomaly Detection#

MicroAI's AtomML is an Edge-native, self-correcting, semi-supervised learning engine that aggregates data from internal device sensors, to tune itself to create a behavioral profile of the asset, which then detects and acts upon abnormal behavior. AtomML brings big infrastructure intelligence into a single piece of equipment or device. Unlike traditional AI-driven asset management solutions that rely on the cloud, AtomML is deployed directly to smart devices and sensors. AtomML operates within the small environment of the device itself, providing a more efficient method for asset analytics and generating real-time alerts. AtomML brings an intimate, local approach to asset management for producing a host of operational efficiencies.

Getting Started

Using MicroAI AtomML with the Thunderboard Sense 2

Wake-word/Voice Commands#

Sensory creates a safer and superior UX through vision and voice technologies. Sensory technologies are widely deployed in consumer electronics applications including mobile phones, automotive, wearables, toys, IoT, PCs, medical products, and various home electronics. Sensory’s product line includes TrulyHandsfree voice control, TrulySecure biometric authentication, and TrulyNatural large vocabulary natural language embedded speech recognition. Sensory’s technologies have shipped in over three billion units of leading consumer products.

Getting Started

Using Sensory Truly Hands Free with the xG24 Dev Kit

Choosing a Machine Learning Tool Based on Use Case#

This section provides getting started links for the available or suggested tools, organized by use case. Each link may be an example, a demo, or a tutorial.

Sensor Signal Processing#

Sensor signal processing is the use of low data rate sensors, like accelerometers, gyroscopes, air quality sensors, temperature sensors, pressure sensors, and so on. These use cases can be found in many types of markets, such as preventive maintenance, medical devices or smoke detectors.

TensorFlow Magic Wand Example - GSDK Platform Example

Predictive Maintenance Demo - SensiML Demo (video 5 min)

Build the Predictive Maintenance Demo - SensiML Tutorial (video 80 min)

Air Quality Anomaly Detection Demo - Micro.ai Demo (video 1.5 min)

Audio Pattern Matching#

Audio pattern matching uses a microphone to detect different sounds. The sounds detected can span a wide range of non-speech sounds, such as a squeaky bearing, jingling keys, breaking glass, running water, animal sounds, and so on.

Creating an Acoustic Smart Home Door Lock Demo - SensiML Tutorial (5 part blog)

Introduction to Building an Audio Classifier Demo - Edge Impulse Tutorial Introduction (video 10 min)

Building an Audio Classifier Demo - Edge Impulse Tutorial (video 70 min)

Audio classifier Example - MLTK Example

Voice Command#

Voice command recognition is a specific subset of audio pattern matching: the recognition of a small set of spoken words. This is also referred to as keyword spotting. It can be used in a variety of use cases, such as waking up a voice service (e.g., Alexa) or using voice activation for smart home devices or appliances.

Zigbee Voice Controlled Light Switch Example - GSDK Zigbee Example

Voice Controlled LED Example - GSDK Platform Example

Create a Voice Command Model for a GSDK Example - MLTK Tutorial

This tutorial can be used for either example above to create a model (i.e., a .tflite file).

Building a Voice Recognition Demo - Edge Impulse Tutorial (video 90 min)

Voice Command/Wake-word Example - Sensory Truly Hands Free Example

Low-Resolution Vision#

Low-resolution vision uses a camera with a resolution of around 100x100 pixels to detect objects for presence detection, people counting, video wake-up, and more.

People Flow and Counting Example - GSDK Platform Example

People Counting Demo - Edge Impulse Example

Build a Rock, Paper, Scissors Detector Demo - MLTK Tutorial

Build a Fingerprint Authenticator Demo - MLTK Tutorial

Summary of Machine Learning Tools#

Below is a table that summarizes our different tool options organized by developer skills and target use cases.