Image Classifier#

Image classification is one of the most important applications of deep learning and Artificial Intelligence. Image classification refers to assigning labels to images based on certain characteristics or features present in them. The algorithm identifies these features and uses them to differentiate between different images and assign labels to them.

This application uses TensorFlow Lite for Microcontrollers to run an image classification model that classifies hand gestures from image data captured by an ArduCAM camera. The detection result is shown on the board's LEDs, and the classification results are written to the VCOM serial port.

This sample application uses the Flatbuffer Converter Tool to add the .tflite file to the application binary.

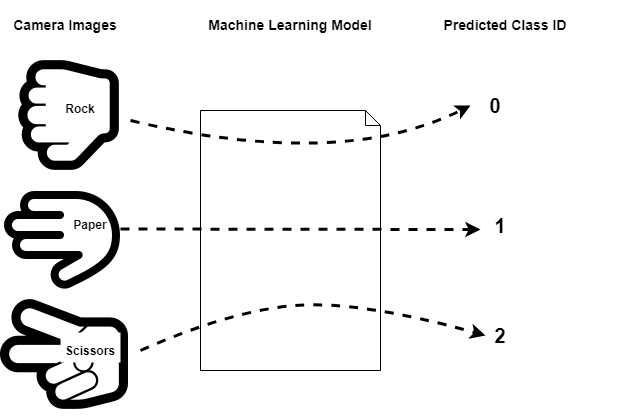

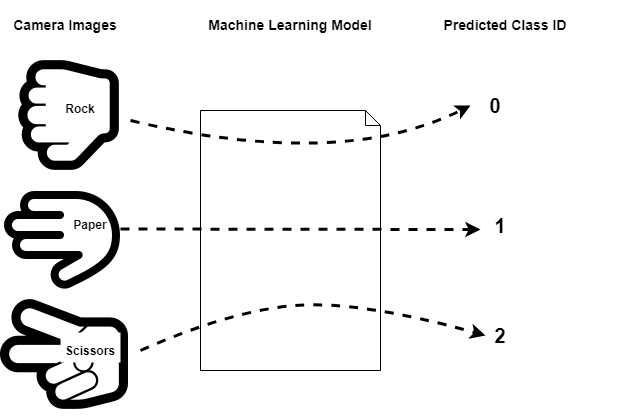

Class Labels#

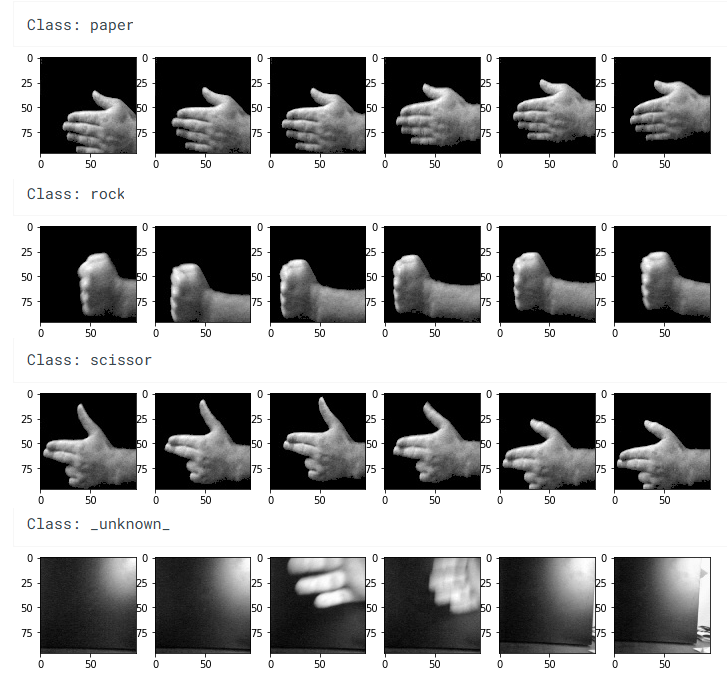

rock: Images of a person’s hand making a “rock” gesture

paper: Images of a person’s hand making a “paper” gesture

scissors: Images of a person’s hand making a “scissors” gesture

unknown: Random images not containing any of the above

The figure in this section shows the hand pose that corresponds to each class label.

Required Hardware and Setup#

A Silicon Labs EFR series board: BRD2601B or BRD2608A.

Berg strip connectors with jumper wires (minimum 8), in either of the following combinations:

Male Berg strip with female-to-female jumper wires.

Female Berg strip with male-to-female jumper wires.

ArduCAM camera module.

Pin Configuration#

The following table shows the pin connections between the ArduCAM and the development kit.

| ArduCAM Pin | Board Expansion Header Pin |
|---|---|
| GND | 1 |
| VCC | 18 |
| CS | 10 |
| MOSI | 4 |
| MISO | 6 |
| SCK | 8 |
| SDA | 16 |
| SCL | 15 |

Required Software#

Simplicity Studio v5 with:

simplicity_sdk

aiml-extension

Dumping Images to PC#

This application uses JLink to stream image data to a Python script located at aiml-extension/tools/image-visualization. To get started, refer to the instructions provided in the readme.md file. Once the application binary is flashed onto the development board, you can launch the visualization tool to view incoming image streams and optionally save them to your local PC.

Application Notes#

Camera Input: This application utilizes images captured using an ArduCAM camera.

Lighting Conditions: The model was trained on well-lit images. To achieve optimal results, conduct experiments in good lighting. Adjust the SL_ML_IMAGE_MEAN_THRESHOLD parameter in the .slcp configuration file to set the minimum mean intensity required for image processing.

Recommended Distance: Maintain approximately 0.5 meters between the camera and the subject’s hand during experimentation.

Background Setup: Use a plain background (preferably white or black) to improve detection accuracy and consistency.
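The lighting note above describes a minimum mean intensity below which an image is not processed. A minimal Python sketch of that idea follows. It is not the application's firmware code: the threshold value, the 96x96 frame size, and the helper name `is_bright_enough` are assumptions chosen for illustration, and the actual comparison used by the component may differ.

```python
import numpy as np

# Hypothetical value for illustration only; the real threshold is whatever
# SL_ML_IMAGE_MEAN_THRESHOLD is set to in the project configuration.
MEAN_THRESHOLD = 40.0


def is_bright_enough(image: np.ndarray, threshold: float = MEAN_THRESHOLD) -> bool:
    """Return True when the mean pixel intensity reaches the threshold."""
    return float(image.mean()) >= threshold


# Two synthetic grayscale frames (the 96x96 size is an assumption).
dark_frame = np.full((96, 96), 10, dtype=np.uint8)
lit_frame = np.full((96, 96), 120, dtype=np.uint8)

for name, frame in (("dark", dark_frame), ("lit", lit_frame)):
    action = "classify" if is_bright_enough(frame) else "skip"
    print(f"{name} frame: mean={frame.mean():.1f} -> {action}")
```

Raising the threshold makes the check stricter, so more dim frames are skipped instead of being classified.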

Convolutional Neural Networks#

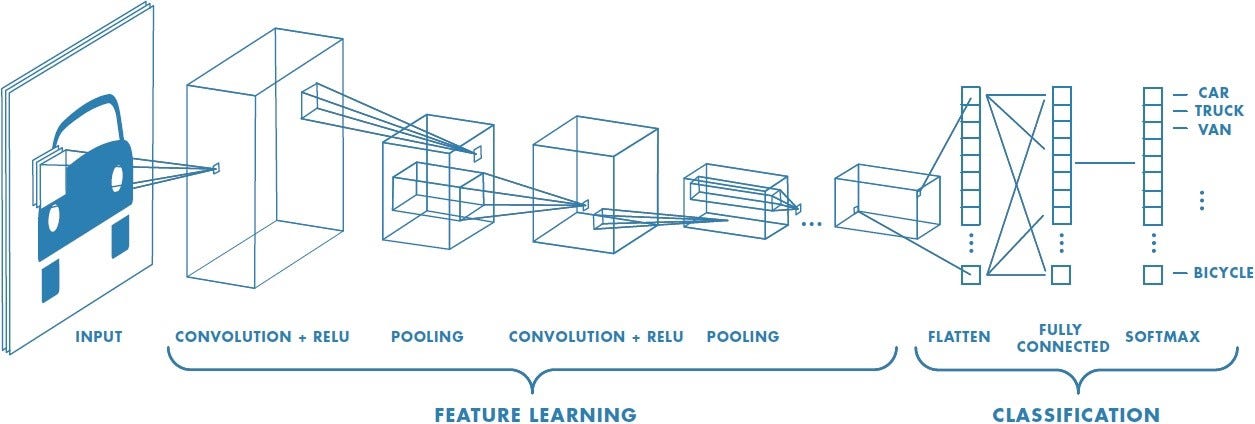

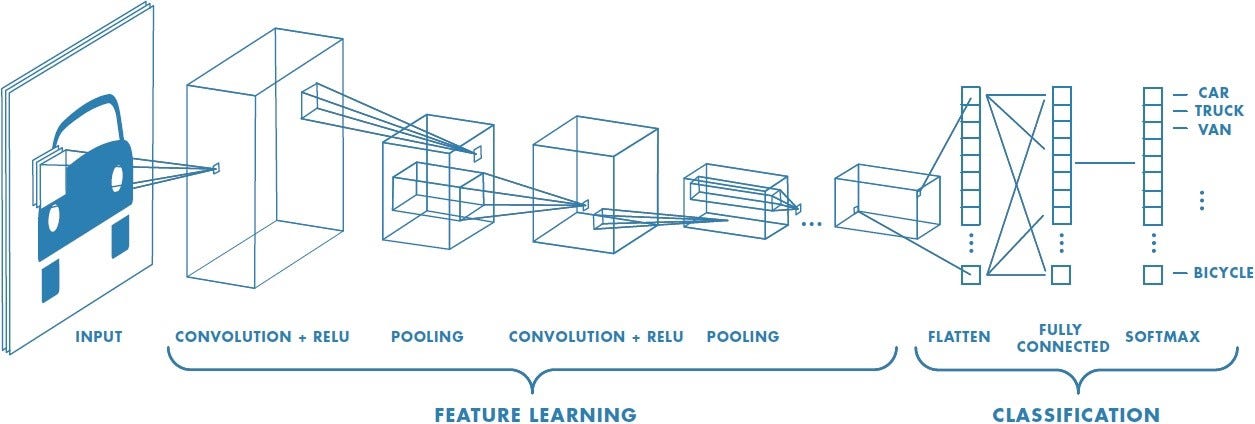

The machine learning model used in this application is a Convolutional Neural Network (CNN).

A Convolutional Neural Network (ConvNet/CNN) is a Deep Learning algorithm which can take in an input image, assign importance (learnable weights and biases) to various aspects/objects in the image and be able to differentiate one from the other.

A typical CNN can be visualized as follows:

A typical CNN comprises multiple layers. Each layer is a mathematical operation on multi-dimensional arrays (also known as tensors). The layers of a CNN can be split into two core phases:

Feature Learning: This uses convolutional layers to extract “features” from the input image.

Classification: This takes the flattened “feature vector” from the feature learning layers and uses one or more “fully connected” layers to predict which class the input image belongs to.
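The two phases can be sketched as a single forward pass in NumPy. This is a toy illustration with random weights, not the model shipped with the application: the 16x16 input, the four 3x3 kernels, the single pooling step, and the order of `LABELS` are all assumptions.

```python
import numpy as np

LABELS = ["rock", "paper", "scissors", "unknown"]  # label order is an assumption


def conv2d(image, kernels):
    """Valid cross-correlation of one grayscale image with a stack of kernels."""
    kh, kw = kernels.shape[1:]
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.zeros((kernels.shape[0], out_h, out_w))
    for k, kernel in enumerate(kernels):
        for i in range(out_h):
            for j in range(out_w):
                out[k, i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def max_pool2x2(fmap):
    """2x2 max pooling with stride 2 on a (channels, height, width) array."""
    c, h, w = fmap.shape
    h2, w2 = h // 2, w // 2
    trimmed = fmap[:, :h2 * 2, :w2 * 2]
    return trimmed.reshape(c, h2, 2, w2, 2).max(axis=(2, 4))


def softmax(z):
    e = np.exp(z - z.max())
    return e / e.sum()


rng = np.random.default_rng(0)
image = rng.random((16, 16))              # stand-in for a camera frame
kernels = rng.standard_normal((4, 3, 3))  # random, untrained filters

# Feature learning: convolution -> ReLU -> pooling
features = max_pool2x2(np.maximum(conv2d(image, kernels), 0.0))

# Classification: flatten -> fully connected layer -> softmax
feature_vector = features.reshape(-1)
weights = rng.standard_normal((len(LABELS), feature_vector.size)) * 0.01
bias = np.zeros(len(LABELS))
probs = softmax(weights @ feature_vector + bias)

predicted = LABELS[int(np.argmax(probs))]
print(features.shape, feature_vector.shape, predicted)
```

Because the weights are random, the predicted label is meaningless here. The point is the data flow: a 2-D image becomes a stack of feature maps, then a flat vector, then one probability per class.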

Deep Learning Pipeline#

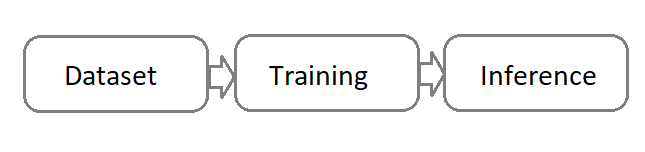

A deep learning pipeline typically consists of three primary stages: dataset collection, model training, and inference, as shown in the pipeline figure.

Some sample data used for training the model in this application is illustrated in the figure below.

Data Preprocessing#

The preprocessing stage involves specific data normalization settings, managed by the ml_image_feature_generation component.

samplewise_center = True

samplewise_std_normalization = True

norm_img = (img - mean(img)) / std(img)

These settings normalize each input image individually using the formula above. This makes the model less dependent on camera and lighting variations.
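The per-image normalization can be sketched in NumPy as shown below. This is an illustrative reimplementation of the formula, not the code of the ml_image_feature_generation component. The function name `normalize_samplewise` and the small `eps` guard against division by zero are assumptions added for the example.

```python
import numpy as np


def normalize_samplewise(img: np.ndarray, eps: float = 1e-6) -> np.ndarray:
    """Center one image on its own mean and scale by its own standard deviation."""
    img = img.astype(np.float32)
    # eps is an added guard (not part of the formula) for constant images.
    return (img - img.mean()) / (img.std() + eps)


rng = np.random.default_rng(0)
base = rng.integers(0, 100, size=(8, 8)).astype(np.float32)

# Simulate a brighter, higher-contrast capture of the same scene.
brighter = base * 2.0 + 30.0

norm_base = normalize_samplewise(base)
norm_brighter = normalize_samplewise(brighter)

print(float(norm_base.mean()), float(norm_base.std()))
print(bool(np.allclose(norm_base, norm_brighter, atol=1e-3)))
```

A uniform change in brightness or contrast cancels out, so both captures produce almost the same normalized input. This is why the settings reduce sensitivity to lighting.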

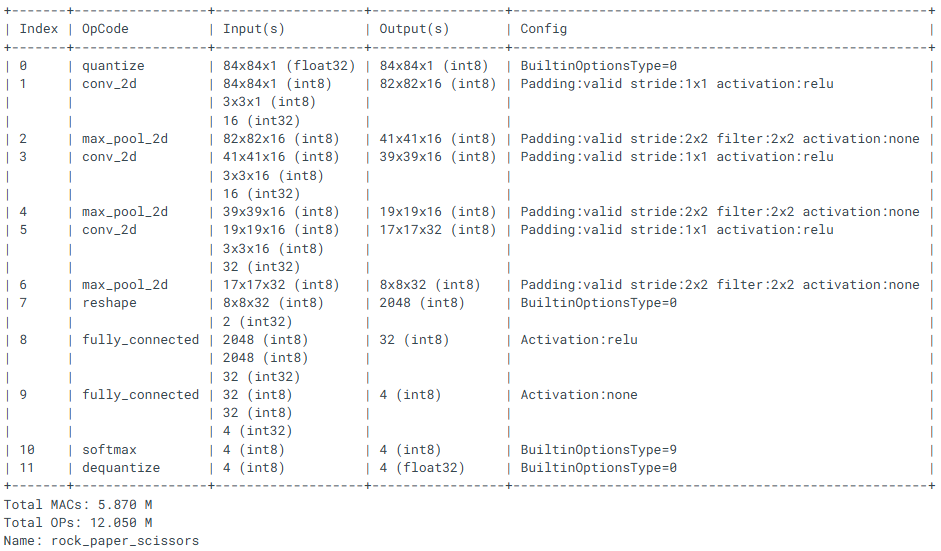

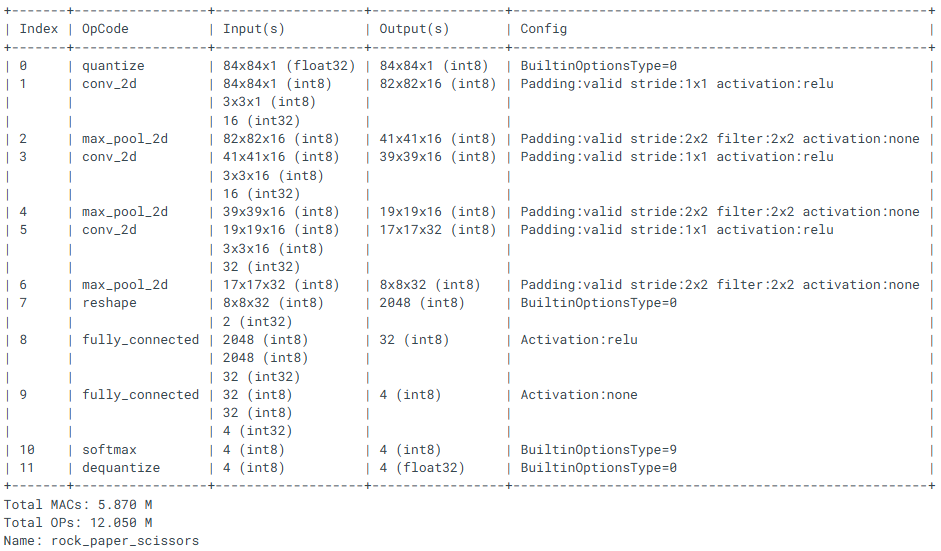

Model Details#

The model summary lists each layer along with its number of parameters. This breakdown outlines the architecture used to perform the image classification task.

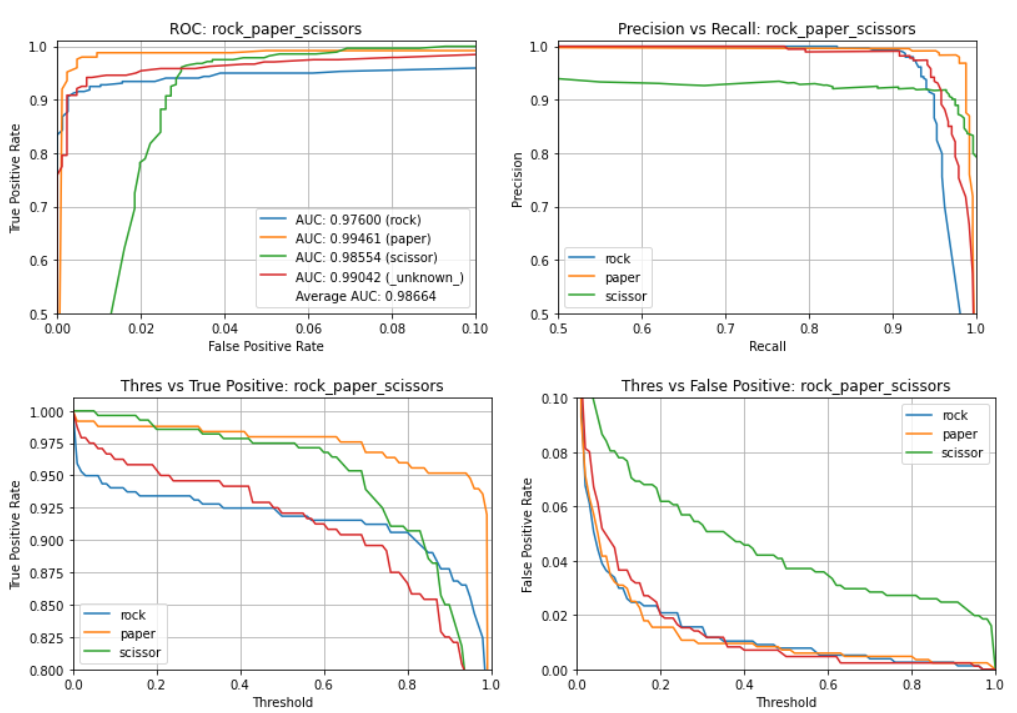

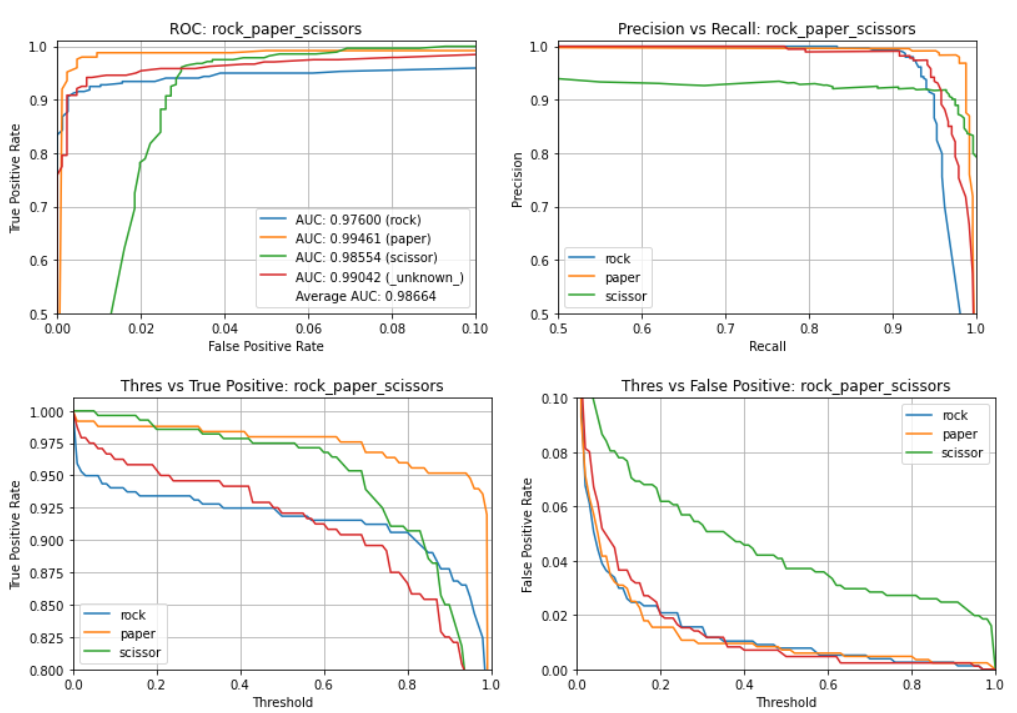

Model Evaluation#

The fundamental approach to model evaluation involves feeding test samples—new, unseen data not used during training—into the model and comparing its predictions against the actual expected values. If every prediction is correct, the model achieves 100% accuracy; each incorrect prediction lowers the overall accuracy.

The figure below illustrates key performance indicators (KPIs) achieved using the Rock-Paper-Scissors dataset, including:

Accuracy: Proportion of correct predictions over total predictions

ROC Curve: Graphical representation of true positive rate vs. false positive rate

Recall: Measure of the model’s ability to identify all relevant instances

Precision: Proportion of true positives among all predicted positives
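Accuracy, precision, and recall can all be derived from a confusion matrix, as in the sketch below. The ten labels and predictions are made-up toy data, not results from the Rock-Paper-Scissors dataset, and the label order is an assumption. The ROC curve is left out because it needs per-class scores rather than hard predictions.

```python
import numpy as np

LABELS = ["rock", "paper", "scissors", "unknown"]  # label order is an assumption

# Toy ground truth and predictions (class indices), invented for illustration.
y_true = np.array([0, 0, 1, 1, 2, 2, 3, 3, 0, 1])
y_pred = np.array([0, 3, 1, 1, 2, 2, 3, 0, 0, 1])

# Confusion matrix: rows are true classes, columns are predicted classes.
cm = np.zeros((len(LABELS), len(LABELS)), dtype=int)
np.add.at(cm, (y_true, y_pred), 1)

true_positives = np.diag(cm)
accuracy = float(true_positives.sum() / cm.sum())

# Precision: true positives over everything predicted as that class.
precision = dict(zip(LABELS, (true_positives / cm.sum(axis=0)).tolist()))
# Recall: true positives over everything that truly is that class.
recall = dict(zip(LABELS, (true_positives / cm.sum(axis=1)).tolist()))

print(f"accuracy = {accuracy:.3f}")
for label in LABELS:
    print(f"{label}: precision = {precision[label]:.3f}, recall = {recall[label]:.3f}")
```

In this toy data, one "rock" sample is predicted as "unknown" and one "unknown" sample as "rock", so those two classes lose both precision and recall while "paper" and "scissors" stay at 1.0.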

Overall accuracy: 95.037%

Class accuracies:

paper = 98.394%

scissor = 97.500%

rock = 92.476%

unknown = 92.083%

Average ROC AUC: 98.664%

Class ROC AUC:

paper = 99.461%

unknown = 99.042%

scissor = 98.554%

rock = 97.600%
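As a quick arithmetic check on the figures above, the reported average ROC AUC equals the unweighted mean of the four class values. The overall accuracy does not equal the unweighted mean of the class accuracies. That would be expected if overall accuracy is computed over all test samples and the classes have different sample counts. This explanation is an assumption, since the per-class sample counts are not given here.

```python
# Values copied from the results listed above (percentages).
class_auc = {"paper": 99.461, "unknown": 99.042, "scissor": 98.554, "rock": 97.600}
class_acc = {"paper": 98.394, "scissor": 97.500, "rock": 92.476, "unknown": 92.083}

mean_auc = sum(class_auc.values()) / len(class_auc)
mean_acc = sum(class_acc.values()) / len(class_acc)

print(f"unweighted mean ROC AUC  = {mean_auc:.3f}%")  # reported average: 98.664%
print(f"unweighted mean accuracy = {mean_acc:.3f}%")  # reported overall: 95.037%
```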